Machine vision

February 21, 2019

Ever had the urge to check for messages on your phone while refueling your car? You probably know that's not a very good idea: in a cloud of fuel vapour, your mobile phone could trigger an explosion. Recently, while topping up at a local unmanned fuel station, I took out my phone anyway (I could not resist) and, to my surprise, the pump stopped. I put my phone away and restarted fueling. Then I took it out again to see whether the pump would stop again, and indeed it did. The only explanation I could think of is that the security camera had spotted the phone and sent a signal to the pump. This is a perfect example of machine vision. Let me explain how that works.

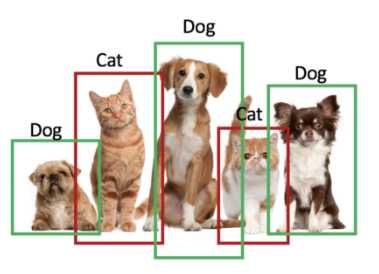

Machine vision, in this sense, is image recognition by computers without spelling out all the rules explicitly. A simple example is recognising pictures of cats and dogs on the internet. Cats and dogs may not be that exciting, but think of recognising them when you are in a self-driving car…

Illustration of recognising cats and dogs.

It would be impossible to give a computer all the rules for recognising cats or dogs, as there are simply too many possibilities. This is where machine vision comes in. By labelling thousands of cat and dog images, it is possible to 'train' a computer to recognise a cat or a dog purely by its characteristics. Most of this training is done with convolutional neural networks (CNNs). A CNN is a type of neural network that is well suited to images, as it breaks them down into reusable components such as corners, transitions and the shape of eyes. If a computer can learn the characteristics of cats and dogs, it can also learn to recognise mobile phones. By feeding a neural network thousands of pictures of people with mobile phones (at unmanned fuel stations), it is possible to recognise when a customer is checking their phone while filling up.
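To make "breaking an image down into components" a bit more concrete, here is a minimal pure-Python sketch of the basic operation inside a convolutional layer: a small kernel slides over the image and produces a feature map. The kernel below is hand-picked to respond to a vertical dark-to-bright transition; in a real CNN the kernel values are learned from the labelled images rather than written by hand. All names here are illustrative.

```python
def convolve2d(image, kernel):
    """Slide a kernel over a grayscale image (no padding, kernel not flipped).

    Like the convolutional layers in most deep-learning libraries, this is
    strictly a cross-correlation: the kernel is applied as-is.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[y + i][x + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hand-made vertical-edge kernel: large output where dark pixels on the
# left meet bright pixels on the right, zero on uniform areas.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 5x5 toy image: dark left part (0), bright right part (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]

feature_map = convolve2d(image, VERTICAL_EDGE)
for row in feature_map:
    print(row)  # [27, 27, 0]
```

The feature map lights up only where the window covers the transition. A CNN stacks many such learned filters, so later layers can combine edges and corners into larger parts like eyes or the outline of a phone.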

It is also possible to feed in pictures of mobile phones placed on a variety of backgrounds (synthetic pictures), so that the neural network picks up the typical shape of a mobile phone regardless of the background.
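A minimal sketch of how such synthetic pictures could be generated, assuming grayscale images stored as lists of lists: paste an object patch onto a random background at a random position and record where it went. The nice side effect is that the bounding-box label comes for free, so nobody has to draw boxes by hand. The 4x2 "phone" patch and the random backgrounds are hypothetical stand-ins; real pipelines would use photographs and typically also scale, rotate and blend the pasted object.

```python
import random


def make_synthetic_sample(background, patch, rng):
    """Paste an object patch onto a copy of a background at a random position.

    Returns the composite image and the patch's bounding box as
    (x_min, y_min, x_max, y_max) in pixels, with exclusive max values.
    """
    bg_h, bg_w = len(background), len(background[0])
    p_h, p_w = len(patch), len(patch[0])
    if p_h > bg_h or p_w > bg_w:
        raise ValueError("patch must fit inside the background")
    top = rng.randint(0, bg_h - p_h)   # randint includes both end points
    left = rng.randint(0, bg_w - p_w)
    image = [row[:] for row in background]  # copy; keep the background intact
    for i in range(p_h):
        for j in range(p_w):
            image[top + i][left + j] = patch[i][j]
    return image, (left, top, left + p_w, top + p_h)


def random_background(rng, height=8, width=8):
    """Hypothetical stand-in for a background photo: random gray values 0..200."""
    return [[rng.randint(0, 200) for _ in range(width)] for _ in range(height)]


rng = random.Random(42)
# Hypothetical "phone": 4 rows high, 2 columns wide, brighter than any background pixel.
phone_patch = [[255, 255] for _ in range(4)]

samples = [make_synthetic_sample(random_background(rng), phone_patch, rng)
           for _ in range(3)]
for image, bbox in samples:
    print(bbox)
```

Each sample pairs an image with its label, which is exactly the kind of training data the network needs.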

If taking thousands of pictures of mobile phones puts you off, you'll be pleased to learn that someone somewhere in the world has already done this. Even better, in an open-source world, neural networks pretrained on a wide variety of objects are freely available.

One example of this is COCO (Common Objects in Context), a large labelled image dataset. Neural networks pretrained on COCO recognise some 80 common object categories, such as people, cars, backpacks, fruit and… mobile phones.
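A COCO-trained detector does not answer "is there a phone?" directly; it returns a list of candidate boxes, each with a class id and a confidence score. The sketch below filters that list for the COCO category "cell phone". It assumes output in the shape used by the TensorFlow Object Detection API (keys `detection_boxes`, `detection_classes`, `detection_scores`, boxes as normalised `[ymin, xmin, ymax, xmax]`) and class ids from its COCO label map, where "cell phone" is 77; check the label map of the model you actually use. The example detections are made up for illustration, not real model output, and the 0.5 threshold is an arbitrary assumption.

```python
# Excerpt of the COCO label map (ids as assumed above; verify for your model).
COCO_LABELS = {1: "person", 3: "car", 27: "backpack", 52: "banana",
               53: "apple", 55: "orange", 77: "cell phone"}


def find_objects(detections, wanted="cell phone", min_score=0.5):
    """Return (score, box) pairs for detections of the wanted class."""
    hits = []
    for box, class_id, score in zip(detections["detection_boxes"],
                                    detections["detection_classes"],
                                    detections["detection_scores"]):
        # Ignore other classes and low-confidence guesses.
        if COCO_LABELS.get(int(class_id)) == wanted and score >= min_score:
            hits.append((score, box))
    return hits


# Made-up detections: a confident person, a confident phone, a weak phone guess.
example = {
    "detection_boxes": [[0.10, 0.20, 0.90, 0.60],
                        [0.40, 0.45, 0.55, 0.52],
                        [0.00, 0.00, 0.10, 0.10]],
    "detection_classes": [1, 77, 77],
    "detection_scores": [0.97, 0.81, 0.12],
}
print(find_objects(example))  # [(0.81, [0.4, 0.45, 0.55, 0.52])]
```

The score threshold is a trade-off: set it too low and the pump stops for every phone-shaped shadow, set it too high and real phones slip through.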

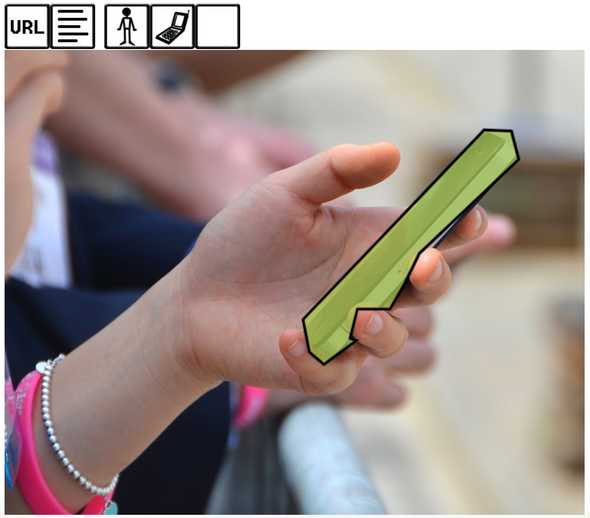

Original picture of a person holding a mobile phone.

Mobile phone detected by a model trained on COCO.

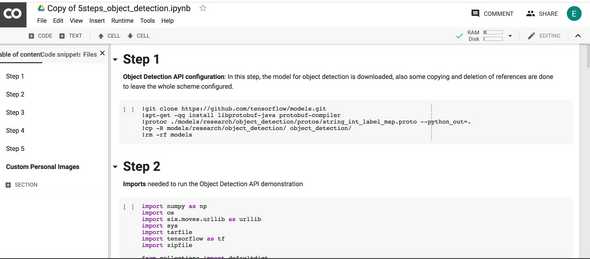

Another open-source initiative for object detection is the TensorFlow Object Detection API. I mention this one because it is easy to set up in Google's Colab environment, which is available (including free GPUs) to everyone with a Google account.

First, you need to understand that a video is just a series of pictures (called frames) captured in real time. Each frame has to be prepared to 'flow' through the trained neural network, and the output of each run is a list of detected objects. If a mobile phone is detected, a signal has to be sent to the pump's control logic to switch it off.
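The frame-by-frame logic described above can be sketched as a simple loop. This is a minimal illustration, not how any real fuel station is wired: `detect` is a hypothetical stand-in for the trained network (it takes a frame and returns the labels of detected objects), and `send_stop_signal` is a hypothetical hook into the pump's control logic. As a design assumption, the sketch sends one signal each time a phone appears, rather than on every frame in which it stays visible.

```python
from typing import Callable, Iterable, List


def monitor(frames: Iterable[object],
            detect: Callable[[object], List[str]],
            send_stop_signal: Callable[[], None]) -> List[int]:
    """Run each frame through the detector and signal the pump when a phone appears.

    Returns the indices of the frames at which a stop signal was sent.
    """
    stops = []
    phone_was_visible = False
    for index, frame in enumerate(frames):
        phone_visible = "cell phone" in detect(frame)
        # Only react to the moment a phone comes into view.
        if phone_visible and not phone_was_visible:
            send_stop_signal()
            stops.append(index)
        phone_was_visible = phone_visible
    return stops


# Fake "video": each frame is already a list of labels, so the fake
# detector just returns it unchanged.
frames = [["person"], ["person", "car"],
          ["person", "cell phone"], ["person", "cell phone"],
          ["person"], ["person", "cell phone"]]
signals = []
stops = monitor(frames, detect=lambda f: f,
                send_stop_signal=lambda: signals.append("STOP"))
print(stops)  # [2, 5]
```

Taking the phone out twice produces two stop signals, just as the pump stopped twice at the fuel station.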

I thought it would be nice to show you how to code the first part, i.e. take a picture of yourself holding a mobile phone and run it through a neural network to see whether it detects the phone.

As a true engineer, I would rather copy something good than invent something poor. With the help of Google, I found this article on how to set up object detection in Colab and plug in your own images (article on Medium).

I simply downloaded the Colab notebook to my Google Drive and opened it in Colab. Make sure you mount your Google Drive in Colab (which is explained here).

Screenshot of Google Colab.

To work on your own pictures, store them in a folder in Google Drive and update the path in section 5 of the Colab Notebook. After running the model you should see your own picture with detected objects highlighted.
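In Colab, Google Drive is typically mounted with `from google.colab import drive` followed by `drive.mount('/content/drive')`, after which your Drive files appear under that mount point. The helper below is a minimal, hypothetical sketch for collecting the images from such a folder. It is not the notebook's own code, and the accepted extensions are an assumption. The demo uses a throwaway temporary folder so it also runs outside Colab; in the notebook you would pass the path of your Drive folder instead.

```python
import tempfile
from pathlib import Path

# Assumed set of image types to pick up; extend if you use others.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_images(folder):
    """Return the image files directly inside folder, sorted by path."""
    return sorted(p for p in Path(folder).iterdir()
                  if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS)


# Demo with a temporary folder containing two images and one text file.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["b.JPG", "a.png", "notes.txt"]:
        (Path(tmp) / name).touch()
    print([p.name for p in list_images(tmp)])  # ['a.png', 'b.JPG']
```

The extension check is case-insensitive because phone cameras often save files as `.JPG`.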

This is a very simple example using a single frame, but you can also set this up for video. I hope it is now clear how the pump at the unmanned fuel station could be switched off when the security camera spots you taking out your mobile phone.

My own object detector correctly identifies my mobile phone.